Who is this blog for?

This series provides a comprehensive journey, starting with a foundational understanding of generative AI and its ethical considerations. It then bridges the gap by showcasing Copilot for Microsoft 365, a practical example of generative AI in action within your existing workflow.

As generative AI becomes more prominent in the business world, ethical considerations rise to the forefront. This blog delves into the importance of responsible AI practices, exploring key areas like bias, transparency, and data privacy.

In our previous blog post, Demystifying Generative AI: What is it and Why Should You Care?, we provided a foundational understanding of this technology. Building on that knowledge, this blog equips you with the tools to implement responsible AI practices and harness its power ethically. Our next blog post, Copilot for Microsoft 365: The Art of the Possible with Generative AI, showcases a practical example of generative AI in action. This powerful tool, designed for Microsoft 365, utilises AI to automate tasks and unlock new possibilities within your existing workflow.

Read time: 7 minutes

—

Adopting a Responsible AI Approach

Welcome to the second blog in this series! So far, we’ve introduced and hopefully demystified generative AI. This piece builds on that foundation and turns to our fundamental responsibility to ensure the power of generative AI is harnessed safely and ethically. Failing to carefully govern and guide the trajectory of such an immensely powerful technology could lead us down a sinister path.

We’ll focus on the importance of driving a responsible AI approach at an organisational and personal level to ensure the ethical adoption of generative AI. First, however, let’s look at the need for a unified, collaborative approach among global leaders.

AI Safety Summit

In early 2023, KPMG surveyed 300 IT decision-makers in the UK and USA on responsible AI. Of those surveyed, 87% believed that AI-powered technologies should be subject to regulation, with 32% believing that regulation should come from a combination of government and industry.

The UK government then announced that rather than appoint a new single regulator, existing regulators would be tasked with formulating their own approaches relative to their sectors. This means building upon the legal foundations already drawn out in their sectors rather than creating new laws.

However, the argument against this approach is that, without core regulatory principles formalised across the board, the UK’s approach may leave significant gaps. As generative AI (GAI) continues to evolve at a rapid rate, those gaps could make it difficult to govern the technology appropriately.

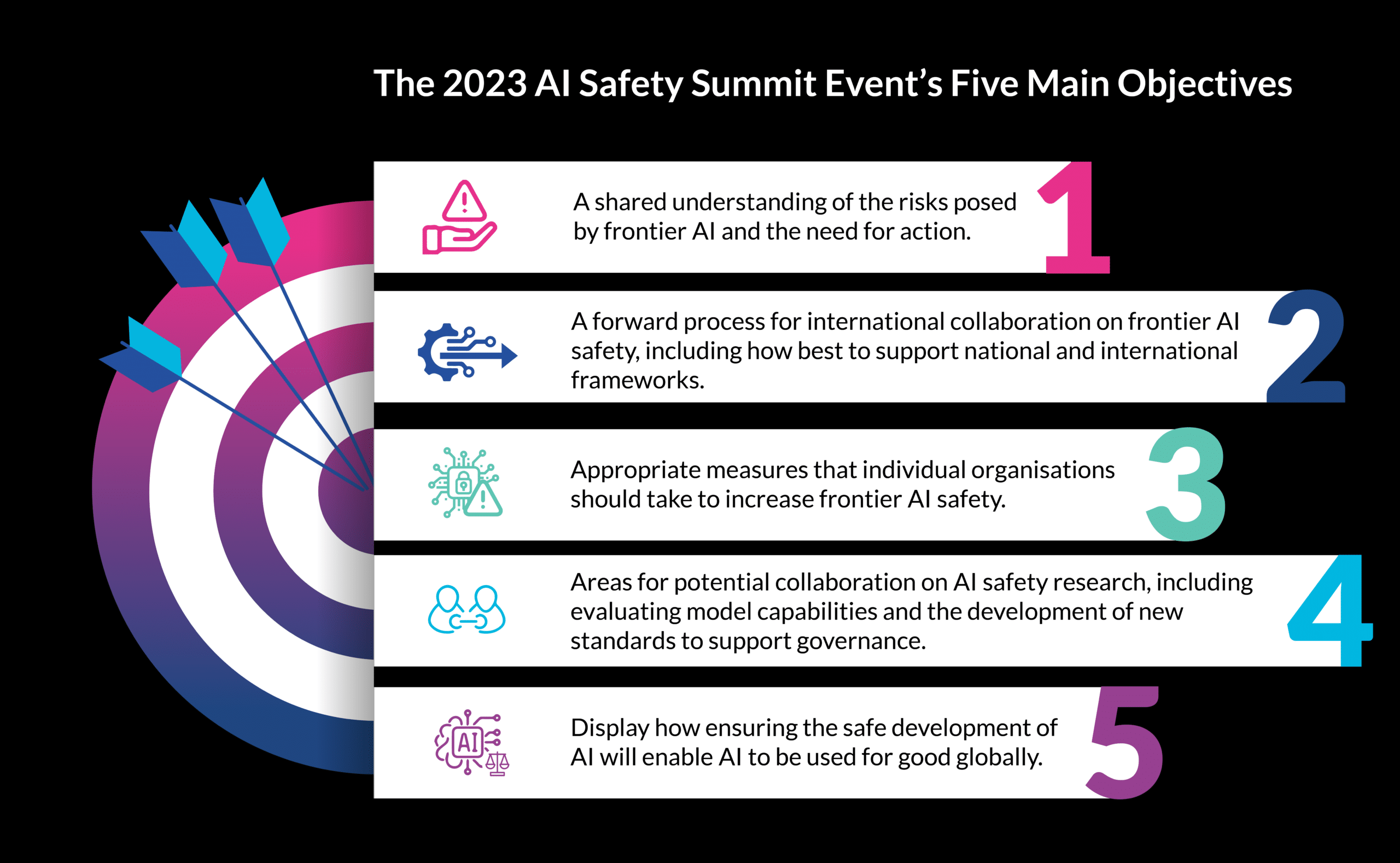

This has since led to the AI Safety Summit, held on the 1st and 2nd November 2023 at Bletchley Park, Buckinghamshire, and hosted by Rishi Sunak, the UK’s Prime Minister.

This was the first global event of its kind, bringing together international governments, leading AI companies, civil society groups, and research experts to consider the risks of AI, especially at the frontier of development, and to discuss how those risks can be mitigated by working together towards a common, global goal.

The summit’s purpose was to hold a pre-emptive discussion and ensure clear alignment on both the opportunities and, in equal measure, the risks posed by ‘frontier AI.’ The UK government defines frontier AI as ‘highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today’s most advanced models.’

The event resulted in several actions, namely:

- The launch of the world’s first AI Safety Institute in the UK, tasked with testing the safety of emerging types of AI and providing guidance and best practices for AI developers and users.

- The adoption of the Bletchley Declaration, a set of principles and commitments for ensuring the safe and responsible development and use of AI, signed by 28 countries and the European Union.

- The announcement of a new AI Safety Fund, a multi-stakeholder initiative to support AI safety research and innovation, with an initial pledge of £500 million from the UK government and other partners.

- The showcase of various AI applications and projects that demonstrate the positive impact of AI on society and the environment, such as AI for health, education, climate change, humanitarian aid, and more.

The event is due to be hosted annually, but it will require continual collaboration between leaders in government and the tech industry to ensure transparency and alignment as the power of this technology inevitably evolves.

What is the Responsible AI framework, what are the key factors, and why is this important?

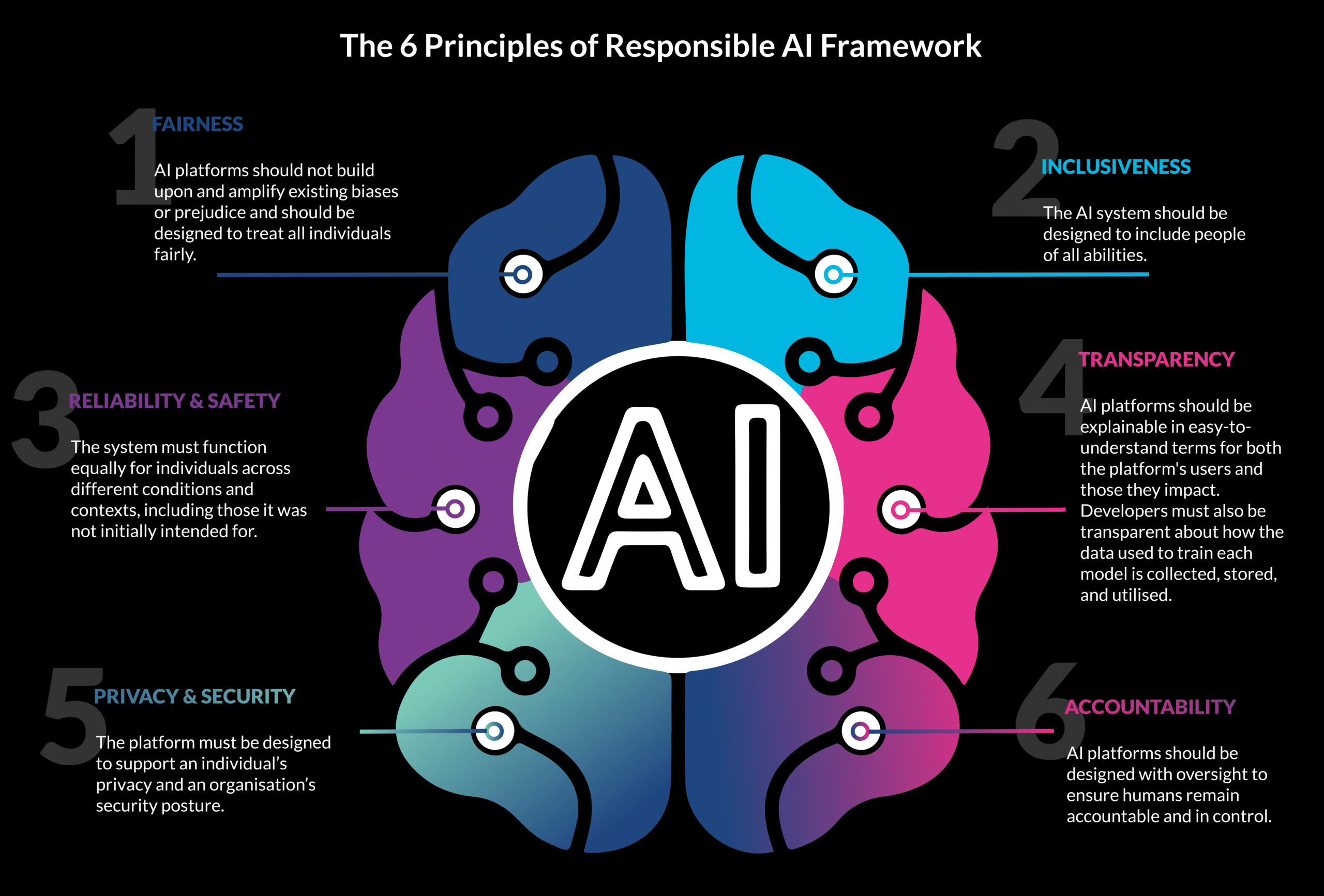

As AI continues to evolve and contributes more to decisions that affect people’s lives, it becomes increasingly important to protect individuals’ rights and privacy. This is why the Responsible AI framework has been developed and widely adopted as the backbone of secure, ethical collaboration between humans and machines.

Responsible AI describes the ethical, secure, and socially trustworthy use of artificial intelligence. The principles and practices outlined within the framework are designed to support the mitigation of harmful financial, reputational, and ethical risks associated with machine learning bias.

There is still some way to go before we see entirely trustworthy and fundamentally objective AI. Such models learn from existing data sets, where historical trends, biases, and prejudice may exist.

This is why organisations looking to leverage GAI, and especially those looking to develop new models and build their own custom AI-powered applications, should adopt six key principles to ensure the technology is being used in a socially responsible way: fairness, inclusiveness, reliability, transparency, privacy, and accountability.

What can I do to ensure our adoption of GAI is secure and responsible?

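Practical steps include establishing cross-functional working groups, fostering AI literacy through training and education, creating an AI code of conduct, integrating Human-in-the-Loop processes, developing AI champions, and committing to continuous monitoring, transparent communication, and regular audits.

These are of course only initial suggestions for how you can start to think about an AI-powered future. As the technology evolves, you must remain agile and evolve with it, updating your code of conduct frequently and learning from new instances and use cases.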

To bring this blog to a fitting end, I asked Copilot to do the honours…

In closing, as we navigate the transformative landscape of Generative AI, it’s imperative that we do so with responsibility and foresight. The recent AI Safety Summit, marked by the launch of the world’s first AI Safety Institute and the adoption of the Bletchley Declaration, exemplifies the global commitment to ensuring the safe and responsible development of AI. The six principles of fairness, inclusiveness, reliability, transparency, privacy, and accountability serve as our guiding pillars in this journey.

As your organisation embarks on its own AI-powered future, consider the practical steps outlined, from establishing cross-functional working groups to fostering AI literacy, training, and education. The creation of an AI code of conduct, the integration of Human-in-the-Loop processes, and the development of AI champions underscore the importance of a collective effort. Continuous monitoring, transparent communication, and a commitment to audit and learn will pave the way for a secure and responsible adoption of Generative AI.

In a world where technology advances at an exponential rate, our ability to adapt and evolve responsibly will define the positive impact we can make on society and the environment. Let us move forward with a shared commitment to harness the power of Generative AI for good, ensuring a future that is both innovative and ethically grounded. Together, we can shape an AI-powered future that aligns with our values, fosters collaboration, and leaves a positive legacy for generations to come.